Overview

This project develops a Smart Audio Recognition Model that activates home devices through sound recognition. The model runs on the Arm Cortex-M33 core of the Silicon Labs EFR32xG24 System-on-Chip (SoC), which serves as the core processing unit for audio input and device control. It is trained to detect and interpret voice commands, so users can control their smart home appliances by voice. You can find the full project on my GitHub.

The EFR32xG24 SoC processes the incoming audio and executes the appropriate action for each command that the integrated model recognizes.

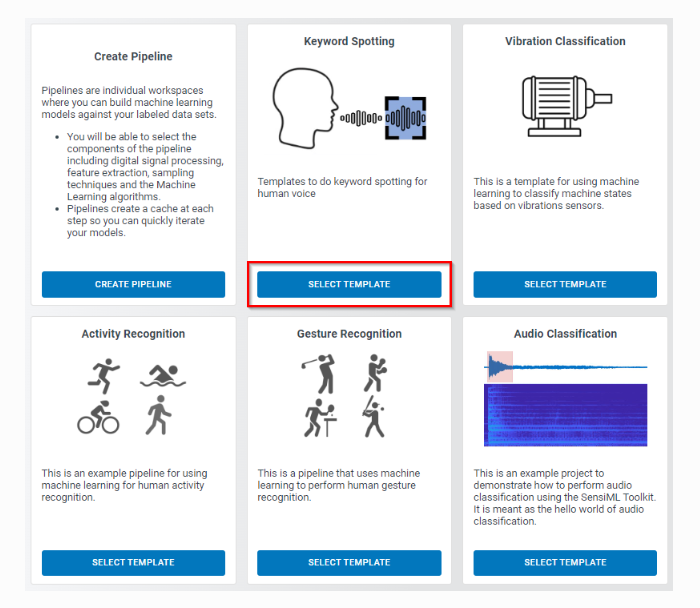

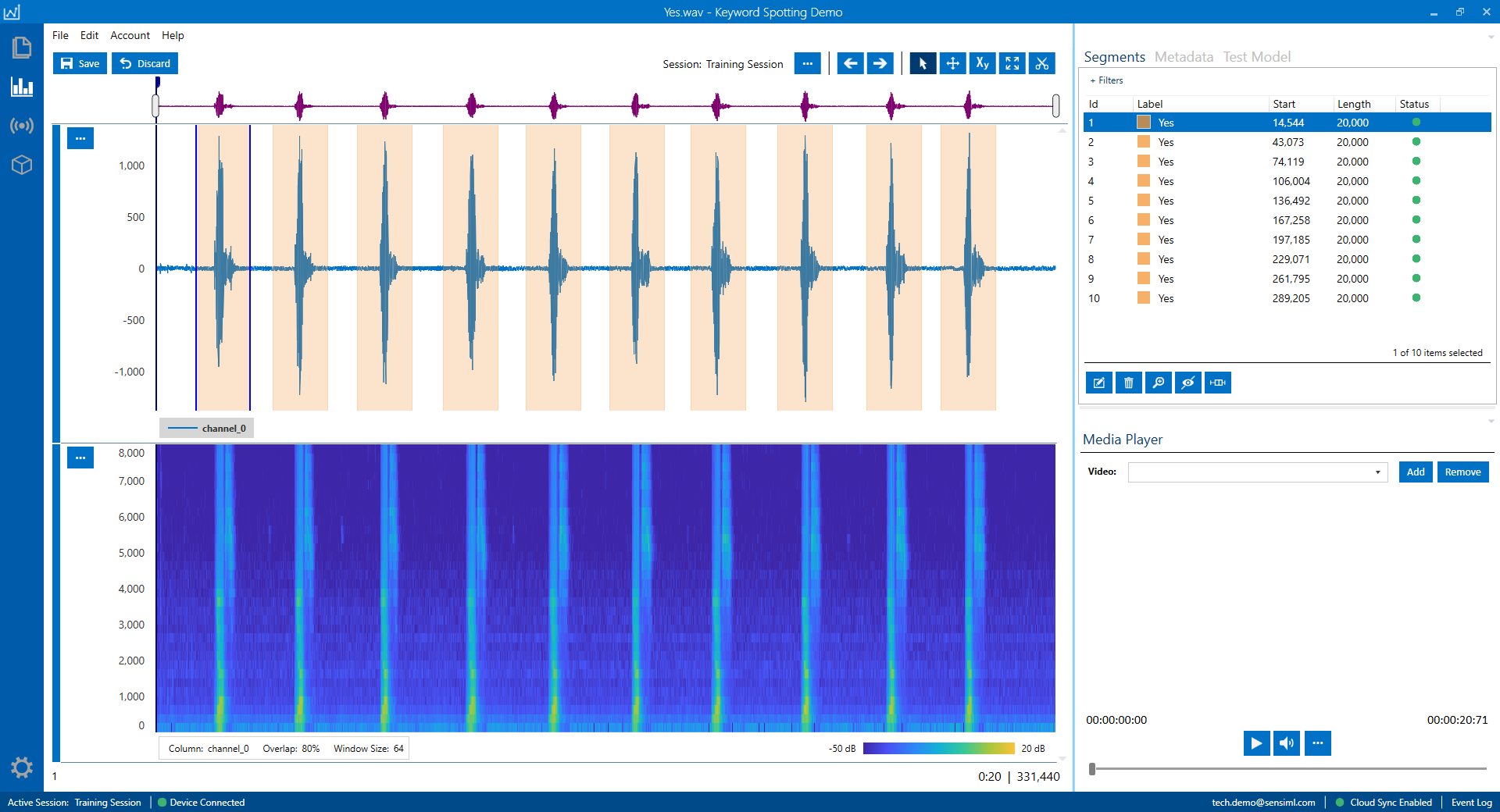

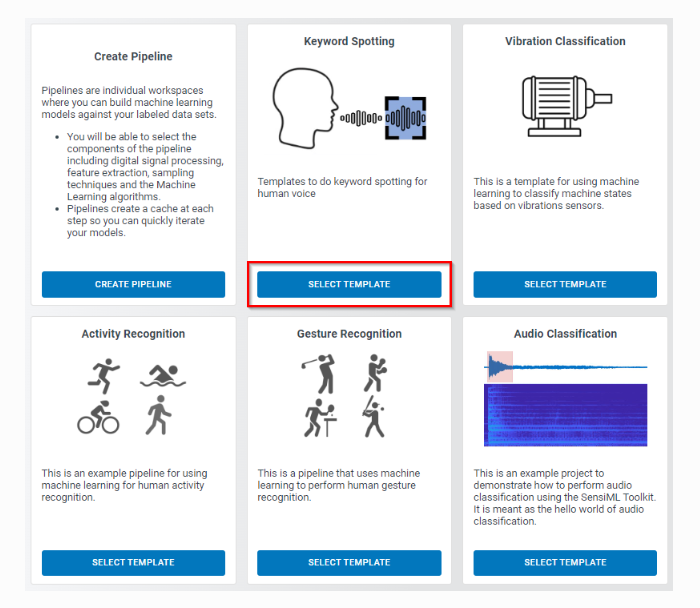

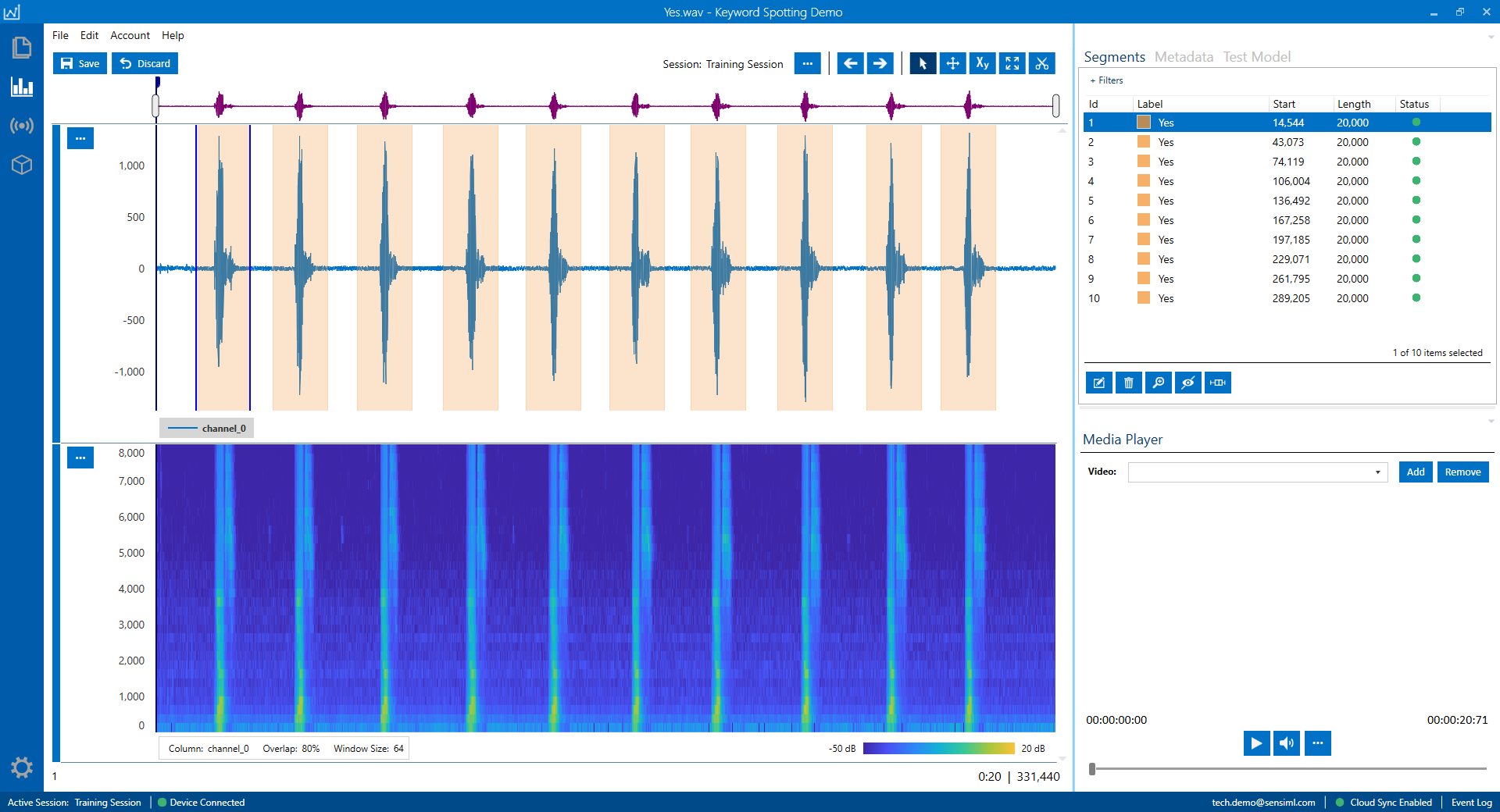

The SensiML Analytics Toolkit is used to capture and annotate the audio data, and TensorFlow is used to build the model that is deployed to and tested on the edge device.

Together, the Smart Audio Recognition Model and the EFR32xG24 SoC enable sound-activated control of home devices. The result is a seamless, hands-free way to manage home devices by voice, which makes smart home systems more convenient and accessible.

Hardware

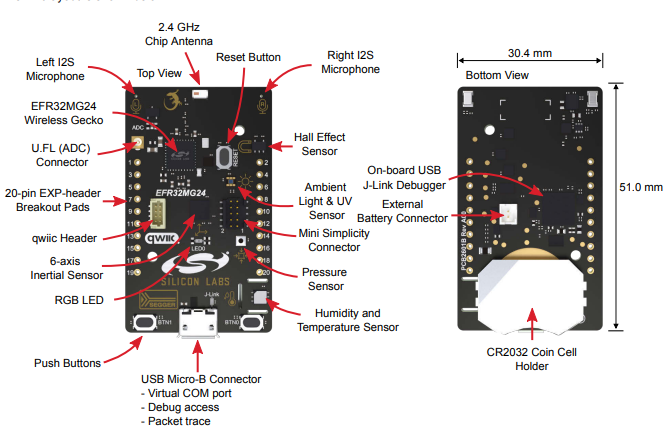

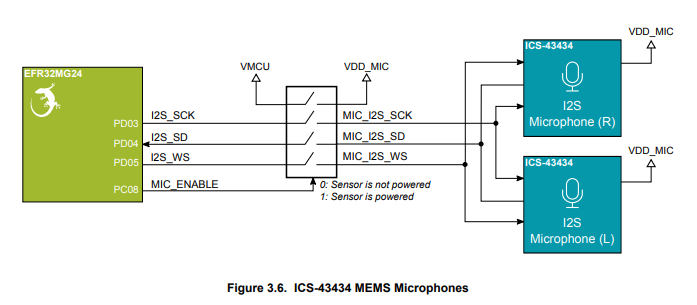

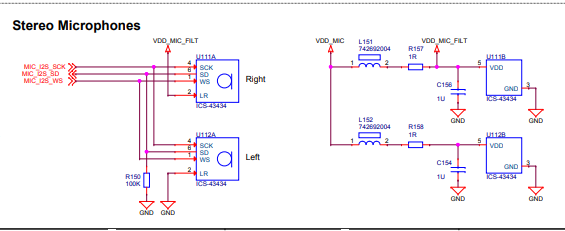

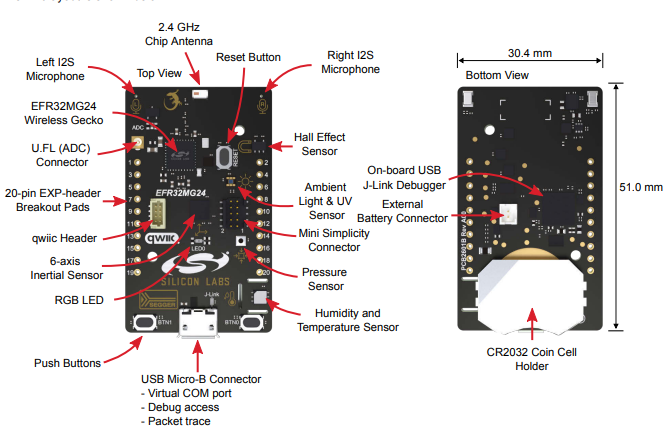

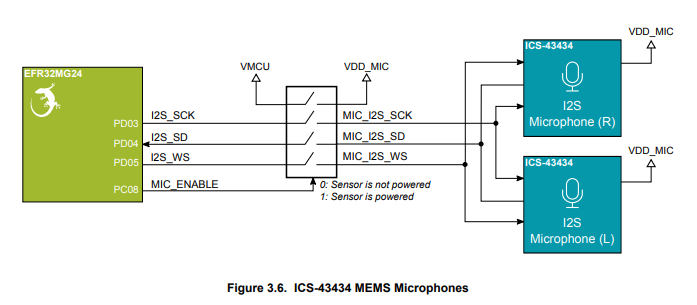

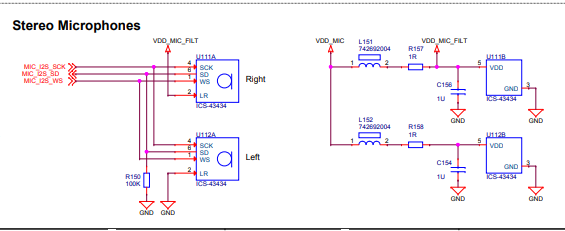

This project uses the EFR32xG24 Dev Kit Board with the onboard IMU and I2S microphone sensor to take audio and motion measurements and send data via serial UART.

The xG24 Dev Kit is a compact and feature-rich development platform designed to support the EFR32xG24 wireless System-on-Chip (SoC) from Silicon Labs. This platform provides a quick and efficient way to develop and prototype wireless IoT products, with features including:

- Support for +10 dBm output power

- Integration of a 20-bit Analog-to-Digital Converter (ADC)

- Access to key features such as the xG24’s AI/ML hardware accelerator

- Low-cost, small form factor design for easy prototyping

- Support for various 2.4 GHz wireless protocols, including BLE, Bluetooth mesh, Zigbee, Thread, and Matter

The kit includes a radio board that acts as a complete reference design for the EFR32xG24 wireless SoC. The platform also provides an onboard J-Link debugger with a Packet Trace Interface and Virtual COM port, which streamlines application development and debugging of the attached radio board and external hardware. For more information, see the kit's datasheet and schematic.

With the xG24 Dev Kit, developers have access to a comprehensive set of tools for creating scalable, high-volume 2.4 GHz wireless IoT solutions on the EFR32xG24 SoC.

Software

This IoT application involves capturing data from an I2S Microphone and an SPI IMU (Inertial Measurement Unit) connected to a device running a bare-metal configuration, and then transferring the captured data to a Python script over a virtual COM port (VCOM) at a baud rate of 921600.
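A quick throughput check shows how much of the 921600-baud link the audio stream occupies. The sketch below assumes 8N1 UART framing (10 bits on the wire per byte) and mono 16-bit PCM at 16 kHz; neither the sample width nor the framing is stated above, and SSI packet overhead and the IMU stream are ignored.

```python
# Rough bandwidth check for the VCOM link.
# Assumptions (not stated in the original): 8N1 framing, mono 16-bit PCM, no protocol overhead.

BAUD_RATE = 921_600          # from the project description
BITS_PER_UART_BYTE = 10      # 1 start + 8 data + 1 stop bit (8N1), assumed
SAMPLE_RATE_HZ = 16_000      # matches the 16 kHz rate used for training
BYTES_PER_SAMPLE = 2         # 16-bit PCM, assumed
CHANNELS = 1                 # mono, assumed


def link_capacity_bytes_per_s(baud: int, bits_per_byte: int = BITS_PER_UART_BYTE) -> float:
    """Maximum number of data bytes per second the UART can carry."""
    return baud / bits_per_byte


def audio_bytes_per_s(rate_hz: int, bytes_per_sample: int, channels: int) -> int:
    """Raw PCM throughput the microphone stream needs."""
    return rate_hz * bytes_per_sample * channels


capacity = link_capacity_bytes_per_s(BAUD_RATE)
needed = audio_bytes_per_s(SAMPLE_RATE_HZ, BYTES_PER_SAMPLE, CHANNELS)
utilization = needed / capacity

print(f"link capacity: {capacity:.0f} B/s")
print(f"audio needs:   {needed} B/s")
print(f"utilization:   {utilization:.1%}")
```

Under these assumptions the audio stream uses roughly a third of the link, leaving headroom for the IMU samples and packet framing.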

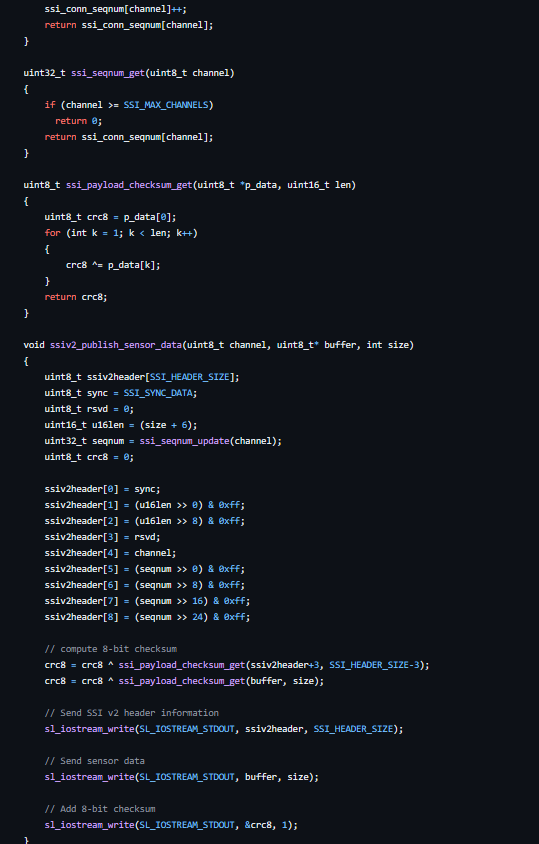

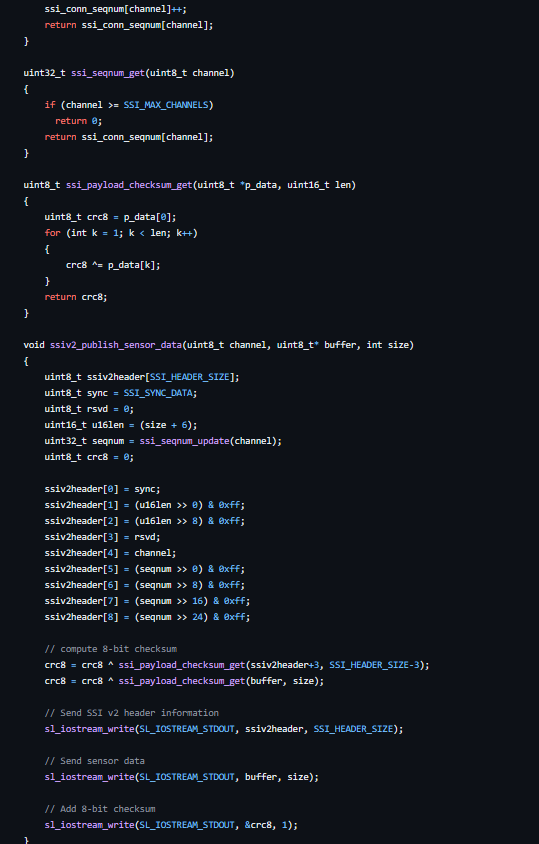

Microphone capture firmware (comms file): packages the captured data in the SSI v2 format so that the receiving software can parse the stream reliably

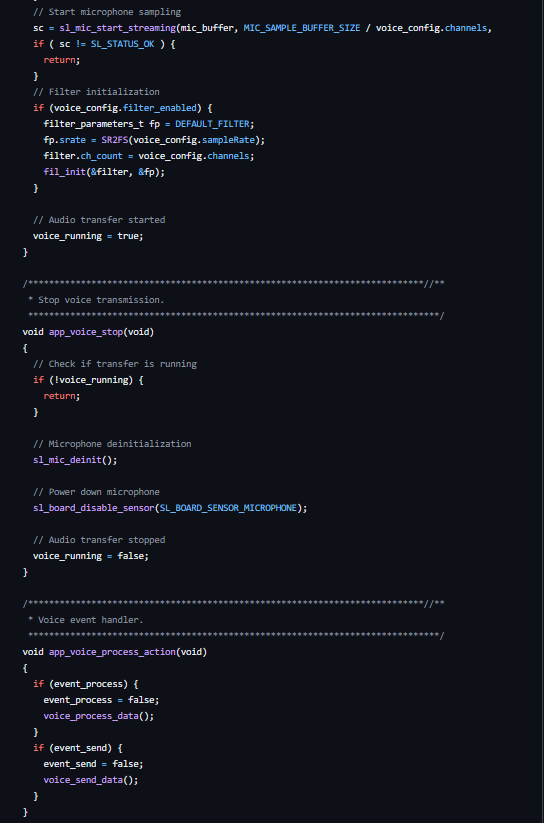

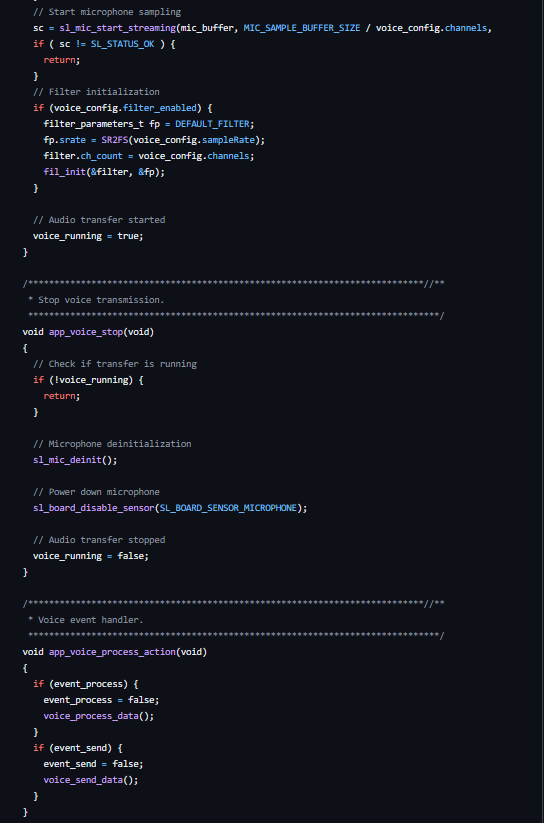

Microphone capture firmware - handling voice transmission

The script then stores the data in two different file formats:

- WAV file for audio data: The captured audio data from the I2S Microphone is stored in a Waveform Audio File Format (WAV), which is a standard format for storing digital audio data. WAV files provide high-quality, uncompressed audio suitable for further processing, analysis, or playback.

- CSV file for IMU data: The captured IMU data, such as accelerometer and gyroscope readings, is stored in a Comma-Separated Values (CSV) file. CSV is a simple, widely used format for tabular data, so the sensor data is easy to analyze in spreadsheet software or data analysis tools.
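A minimal sketch of this storage step, using only Python's standard library (`wave`, `csv`, `struct`). It assumes mono 16-bit PCM at 16 kHz, and the CSV column names are hypothetical; the project's actual script and column layout may differ.

```python
import csv
import struct
import wave


def save_audio_wav(path: str, samples: list[int], sample_rate: int = 16_000) -> None:
    """Write mono 16-bit signed PCM samples to a WAV file (format assumed)."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)          # mono, assumed
        wav.setsampwidth(2)          # 2 bytes per sample = 16-bit PCM, assumed
        wav.setframerate(sample_rate)
        # WAV stores PCM as little-endian signed integers.
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))


# Hypothetical column layout for one IMU reading per row.
IMU_COLUMNS = ["timestamp_ms", "accel_x", "accel_y", "accel_z", "gyro_x", "gyro_y", "gyro_z"]


def save_imu_csv(path: str, rows: list[tuple]) -> None:
    """Write IMU readings to CSV with a header row."""
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(IMU_COLUMNS)
        writer.writerows(rows)
```

For example, `save_audio_wav("capture.wav", samples)` and `save_imu_csv("capture.csv", imu_rows)` would produce the two files described above, assuming `samples` holds decoded 16-bit integers and `imu_rows` holds one tuple per reading.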

The example project utilizes the following key software components:

- I/O Stream Service: This service enables the transfer of data between the device and the Python script through the virtual COM port.

- Microphone and IMU Component Drivers: These drivers handle the communication and data retrieval from the I2S Microphone and SPI IMU, respectively.

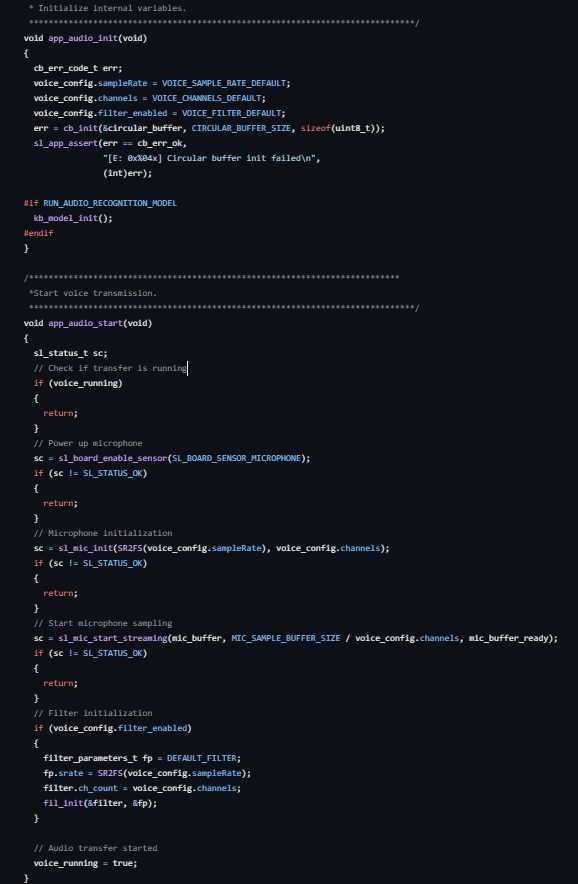

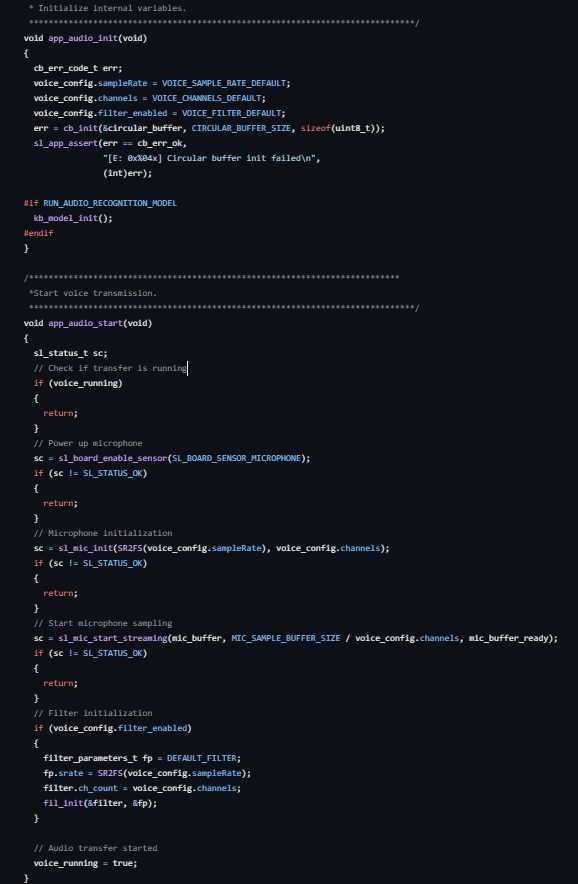

This code is responsible for setting up and starting the audio capture and transmission process, potentially including optional features like audio filtering and recognition, depending on the specific configuration.

Overall, this IoT application demonstrates a simple yet effective way to capture and store sensor data for further analysis or processing, showcasing the capabilities of the hardware and software components involved.

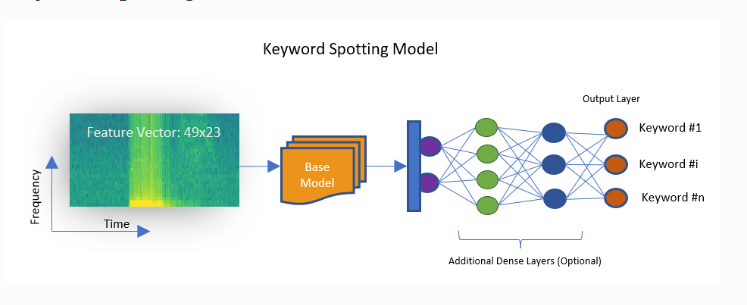

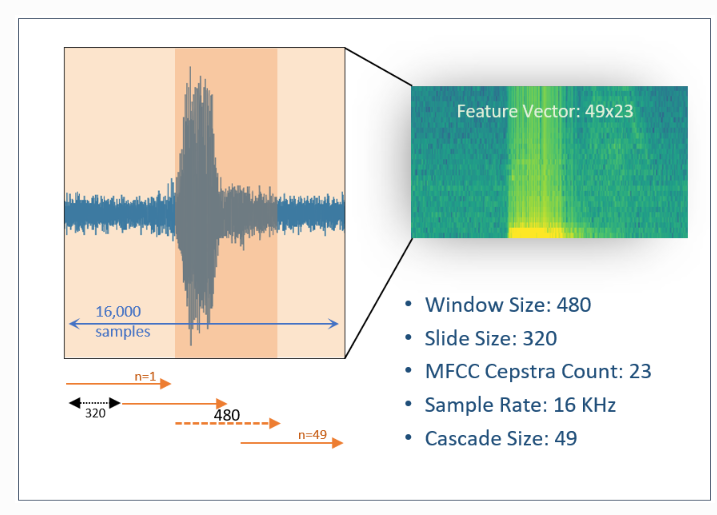

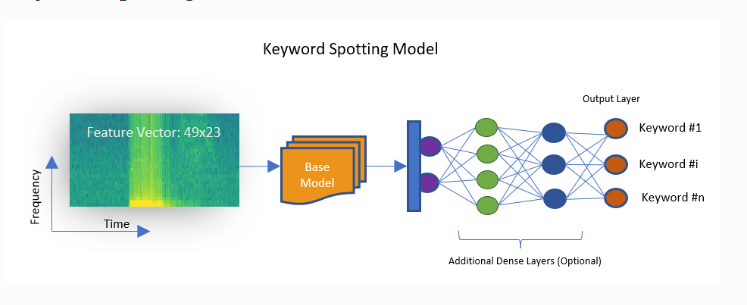

Training

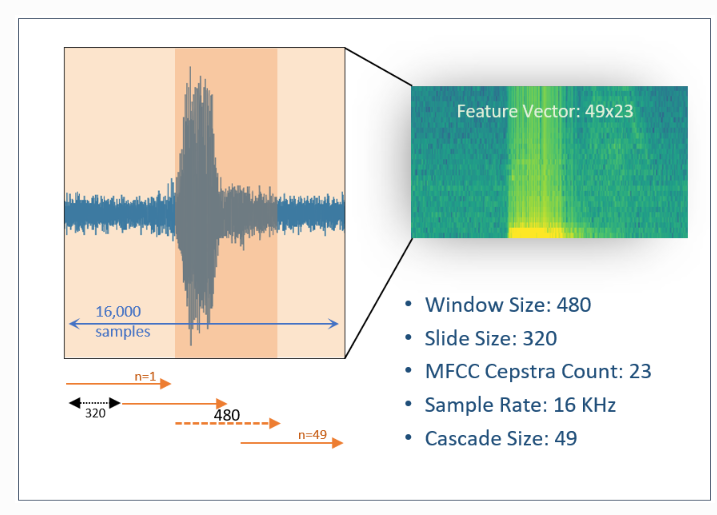

The training portion of this project focuses on building a robust keyword spotting model. The model identifies specific keywords within 1-second audio windows of 16,000 samples at a 16 kHz sample rate. For best results, we recommend labeling segments of 20,000 samples (1.25 s), so that each 16,000-sample window taken from a segment still covers a significant part of the audio event.
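The relationship between a 20,000-sample labeled segment and the 16,000-sample model input can be sketched as a sliding window. The 1,000-sample stride and the helper name `sliding_windows` are illustrative assumptions, not SensiML parameters.

```python
SAMPLE_RATE_HZ = 16_000   # sample rate used for training
WINDOW = 16_000           # 1 s model input
SEGMENT = 20_000          # recommended labeled segment length (1.25 s)
STRIDE = 1_000            # hypothetical hop between consecutive windows


def sliding_windows(segment: list[int], window: int = WINDOW, stride: int = STRIDE) -> list[list[int]]:
    """Return every full-length window of `segment`, stepping `stride` samples at a time."""
    if len(segment) < window:
        raise ValueError("segment is shorter than one model window")
    return [segment[start:start + window]
            for start in range(0, len(segment) - window + 1, stride)]


# Samples covered by every window: the first window ends at WINDOW and the
# last one starts at SEGMENT - WINDOW, so the overlap is their difference.
shared_samples = WINDOW - (SEGMENT - WINDOW)

windows = sliding_windows(list(range(SEGMENT)))
print(len(windows), "windows;", shared_samples / SAMPLE_RATE_HZ, "s shared by all")
```

With these numbers, the central 12,000 samples (0.75 s) of a segment appear in every window, which is why the audio event should sit near the middle of the labeled segment.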

The model creation process involves the following steps:

- Prepare Data: Define a query to extract relevant data from the annotated dataset for training purposes.

- Build Model: Set up the model generator pipeline and optimize the keyword spotting model using the provided data. This step preprocesses the annotated data and uses it for training and cross-validation.

- Explore Model: Assess the model's performance using summary metrics and visualizations.

- Test Model: Run the generated classifier on a separate, unused test dataset to evaluate its real-world performance. The test dataset should have the same characteristics as the training/validation data, such as ambient noise levels and diversity of subjects.
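The Prepare Data and Test Model steps depend on keeping evaluation data apart from training data. Below is a generic stratified split in plain Python, offered as a sketch only: the fractions, seed, and the `stratified_split` name are assumptions, and SensiML's pipeline performs its own splitting and cross-validation. In practice it is safer to split by recording session or speaker, so clips from the same recording never land in both training and test sets.

```python
import random
from collections import defaultdict


def stratified_split(samples, val_frac=0.2, test_frac=0.2, seed=0):
    """Split (features, label) pairs into train/validation/test lists, per label."""
    by_label = defaultdict(list)
    for item in samples:
        by_label[item[1]].append(item)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val, test = [], [], []
    for label in sorted(by_label):
        items = by_label[label][:]   # copy before shuffling
        rng.shuffle(items)
        n_test = round(len(items) * test_frac)
        n_val = round(len(items) * val_frac)
        test.extend(items[:n_test])
        val.extend(items[n_test:n_test + n_val])
        train.extend(items[n_test + n_val:])
    return train, val, test
```

Splitting each label separately keeps the label proportions the same in all three sets, so the test metrics are not skewed by a label that happens to be missing from the test data.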

To customize the training process for your dataset, consider tweaking the following parameters:

- Dense Layers: Add dense layers to improve model performance, particularly for large projects with many keywords or similar-sounding words.

- Training/Validation Size Limit: Cap the number of randomly selected samples per label in each training epoch to keep training time manageable on large datasets.

- Batch Normalization: Use this technique to stabilize training, reduce the risk of overfitting, and speed up convergence.

- Dropout: Apply this regularization technique, which randomly drops a fraction of layer outputs during training, to make the model more robust and reduce overfitting.

- Data Augmentation Techniques: Enrich the feature vectors by altering their dimensions or by incorporating feature vectors from similar projects. This can help the model generalize to unseen data.
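The Training/Validation Size Limit can be pictured as drawing a fresh, capped random subset of each label for every epoch. This is a plain-Python sketch of that idea; `capped_epoch` and `max_per_label` are hypothetical names, and the toolkit's own parameter may behave differently.

```python
import random
from collections import defaultdict


def capped_epoch(samples, max_per_label, rng):
    """Draw at most `max_per_label` random (features, label) samples of each label for one epoch."""
    by_label = defaultdict(list)
    for features, label in samples:
        by_label[label].append((features, label))

    epoch = []
    for label in sorted(by_label):
        items = by_label[label]
        k = min(max_per_label, len(items))
        epoch.extend(rng.sample(items, k))   # sample without replacement
    rng.shuffle(epoch)                        # mix labels before batching
    return epoch
```

Drawing a new subset each epoch keeps every epoch bounded while still exposing the model to the whole dataset over many epochs, and it stops a heavily represented label from dominating any single epoch.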

By carefully configuring these parameters and following the provided guidelines, you can create a high-performing keyword spotting model tailored to your specific application.

Application

The development of the Voice-Activated Smart Lock system showcases the potential of integrating cutting-edge technologies like machine learning and advanced microcontrollers to create innovative and secure home automation solutions.

By leveraging the EFR32xG24 SoC and its Cortex-M33 core, this project demonstrates how to train models and deploy them for voice command recognition on edge devices. The result is an intelligent, user-friendly, and secure access control system that paves the way for further advances in smart home security.

This versatile sensor device is designed to be installed near a door's deadbolt to enhance security. The board is also a valuable tool for developing a range of audio classification algorithms, including key unlocking, industrial sound recognition, and sound-activated smart home devices, which highlights its adaptability to diverse applications.

Overall, this project serves as a testament to the power and versatility of modern embedded systems and their capacity to enhance our daily lives.
